2020-present.

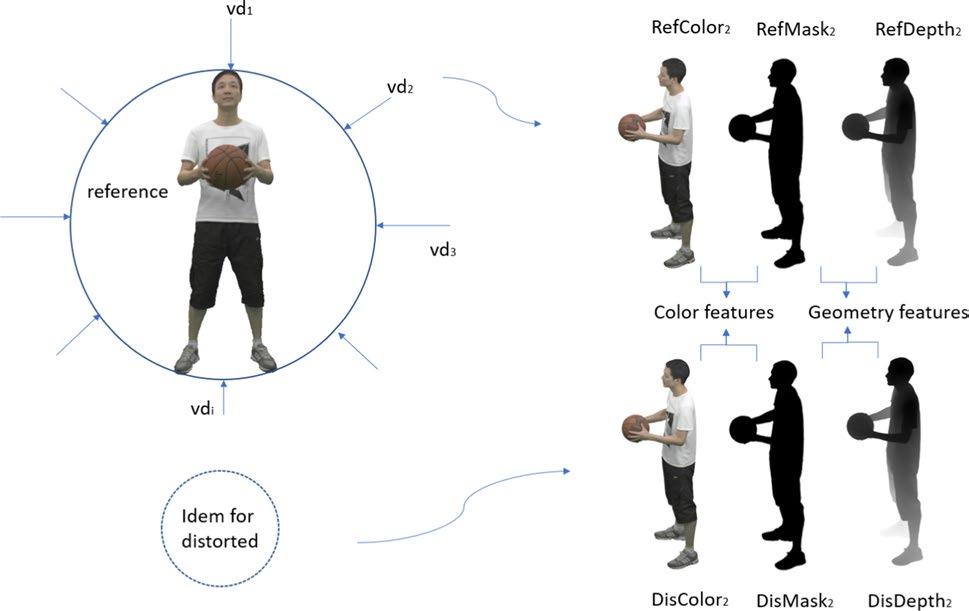

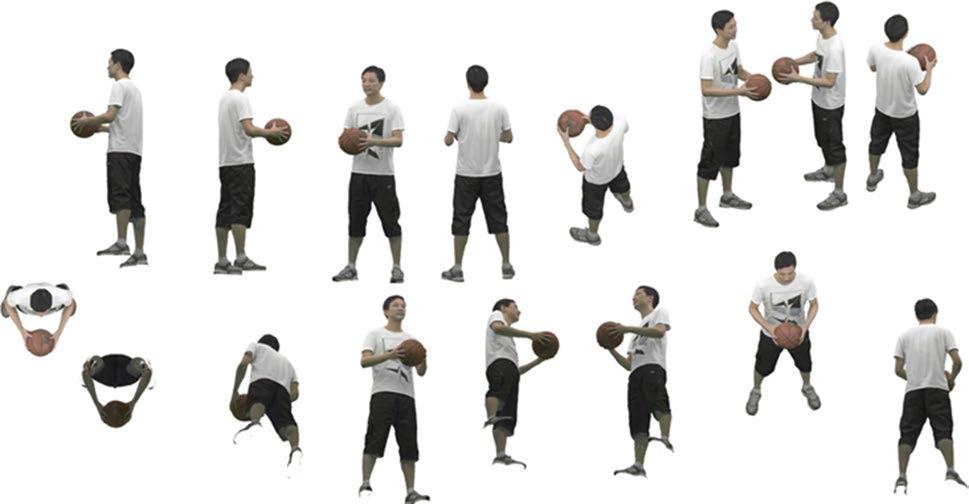

Each frame of a volumetric video can be viewed from any perspective, which makes the format well suited to AR and VR applications where users move freely around the subject. The example shown is the Basketball model from the MPEG dataset, courtesy of Owlii. Volumetric videos can be represented as point clouds or as textured meshes.

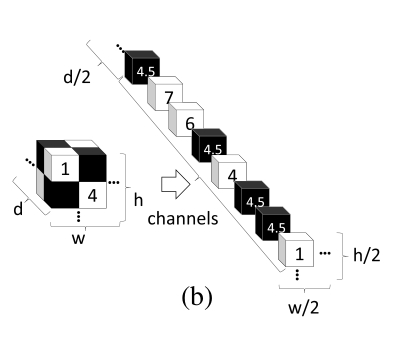

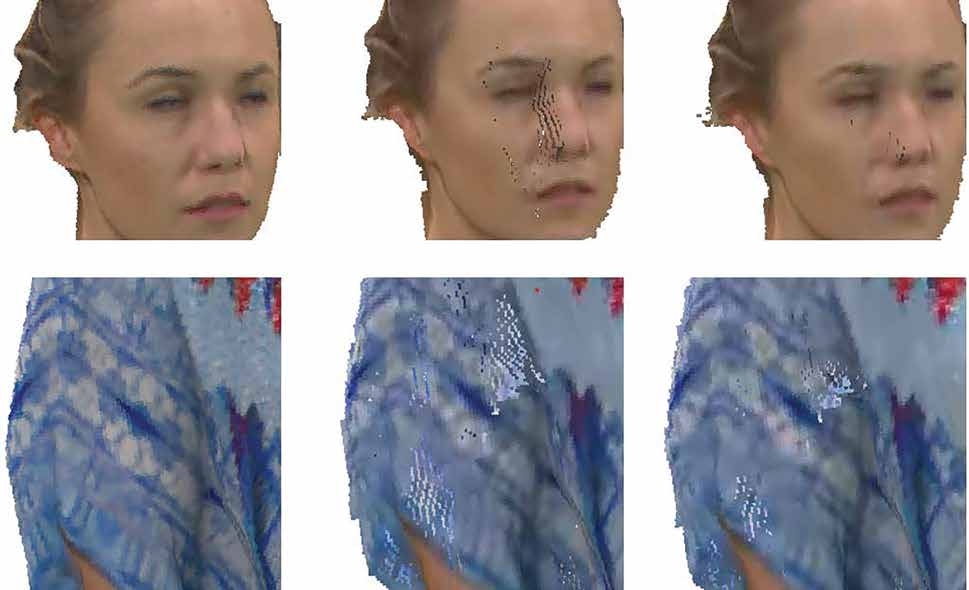

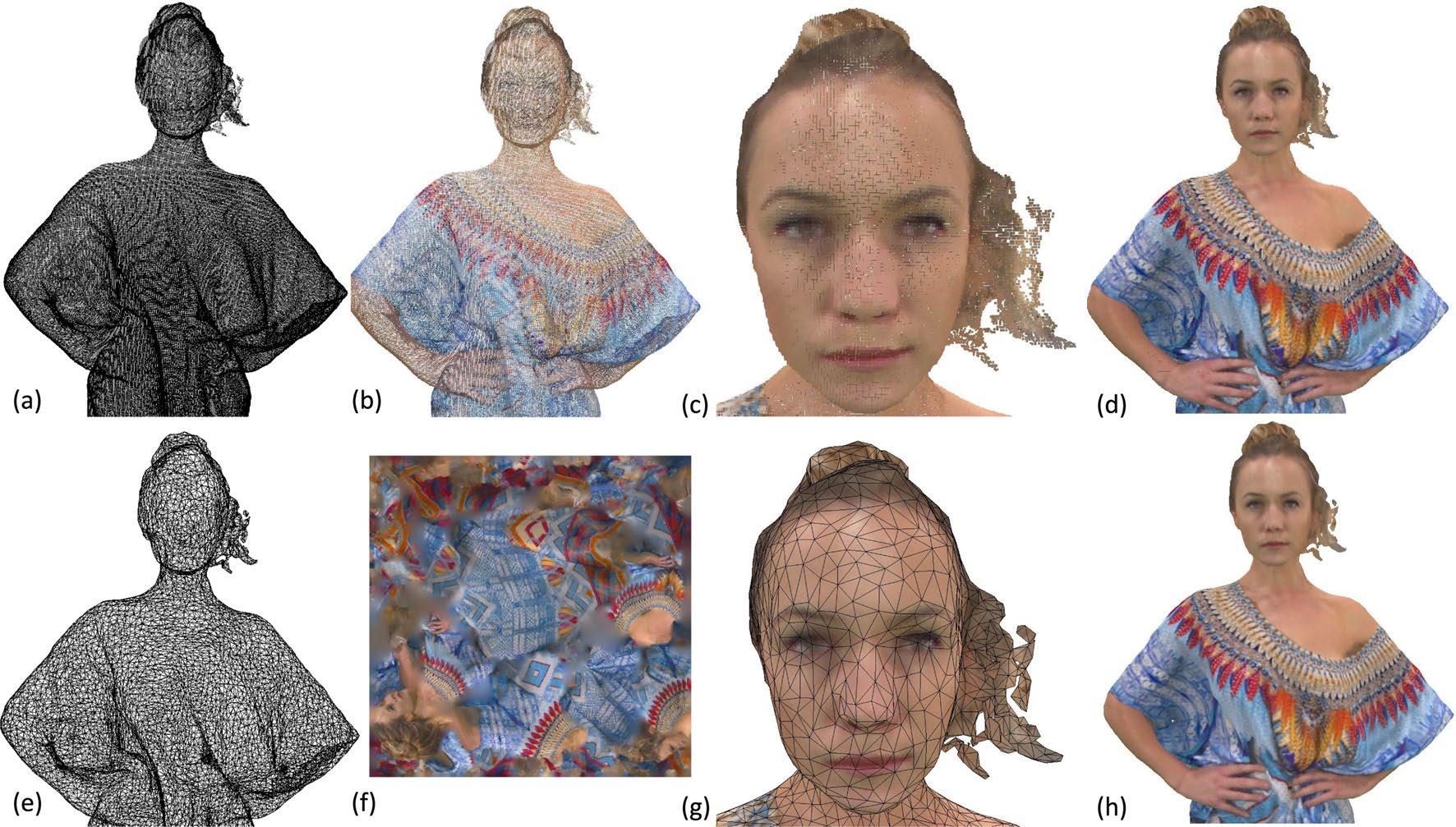

A 3D point cloud is a discrete set of points in 3D space (a). Each point can be assigned a color (b). Rendering the points directly leaves gaps, so the surface appears non-continuous (b, c); a point cloud therefore often needs a very large number of points (e.g., millions) to look solid. Splatting techniques address this by filling the gaps between points during rendering (d). A 3D mesh, by contrast, comprises vertices (3D points), edges, and faces that define a polyhedral surface (e). Faces are commonly triangles. Color images called texture maps (f) are mapped onto these triangles to add surface detail (g). Rendering a textured mesh produces a continuous surface (h). The example shown is the Longdress model from the MPEG dataset, courtesy of 8i.
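The two representations described above can be sketched as plain data structures. This is a minimal illustration, not the layout used by any MPEG codec or dataset: the names `PointCloud`, `TexturedMesh`, `render_points`, and `coverage` are hypothetical, and the toy renderer uses an orthographic projection with square splats as a stand-in for real splatting techniques.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple

Vec3 = Tuple[float, float, float]
RGB = Tuple[int, int, int]
UV = Tuple[float, float]


@dataclass
class PointCloud:
    """Hypothetical container: a discrete set of colored 3D points."""
    positions: List[Vec3]  # one (x, y, z) per point
    colors: List[RGB]      # one (r, g, b) per point, same length as positions


@dataclass
class TexturedMesh:
    """Hypothetical container: vertices, triangular faces, and a texture map."""
    vertices: List[Vec3]               # 3D points
    faces: List[Tuple[int, int, int]]  # triangles as indices into `vertices`
    uvs: List[UV]                      # per-vertex texture coordinates in [0, 1]
    texture: List[List[RGB]]           # texture map stored as rows of pixels

    def edges(self) -> Set[Tuple[int, int]]:
        """Unique undirected edges implied by the triangle list."""
        found = set()
        for a, b, c in self.faces:
            for i, j in ((a, b), (b, c), (c, a)):
                found.add((min(i, j), max(i, j)))
        return found


def render_points(cloud: PointCloud, width: int, height: int,
                  radius: int = 0) -> List[List[Optional[RGB]]]:
    """Toy orthographic renderer: drop z and paint a square splat per point.

    radius=0 paints a single pixel per point (direct rendering);
    radius>0 paints a (2*radius+1)-wide square, filling gaps between points.
    A depth buffer keeps the point with the smallest z at each pixel.
    """
    image: List[List[Optional[RGB]]] = [[None] * width for _ in range(height)]
    depth = [[float("inf")] * width for _ in range(height)]
    for (x, y, z), color in zip(cloud.positions, cloud.colors):
        px, py = int(round(x)), int(round(y))
        for v in range(py - radius, py + radius + 1):
            for u in range(px - radius, px + radius + 1):
                if 0 <= u < width and 0 <= v < height and z < depth[v][u]:
                    depth[v][u] = z
                    image[v][u] = color
    return image


def coverage(image: List[List[Optional[RGB]]]) -> int:
    """Number of pixels that received a color."""
    return sum(pixel is not None for row in image for pixel in row)


# A sparse 3x3 grid of points sampled every 2 units on a 5x5 image.
cloud = PointCloud(
    positions=[(float(x), float(y), 0.0) for y in (0, 2, 4) for x in (0, 2, 4)],
    colors=[(255, 0, 0)] * 9,
)
print(coverage(render_points(cloud, 5, 5, radius=0)))  # 9: gaps between points
print(coverage(render_points(cloud, 5, 5, radius=1)))  # 25: splats fill the gaps

# A quad made of two triangles that share one edge.
quad = TexturedMesh(
    vertices=[(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)],
    faces=[(0, 1, 2), (0, 2, 3)],
    uvs=[(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)],
    texture=[[(255, 255, 255)]],
)
print(len(quad.edges()))  # 5
```

The sparse cloud covers only 9 of 25 pixels when rendered directly, which mirrors why point clouds need many points or splatting to look continuous. The mesh describes the same square with four vertices and two faces, and the texture map supplies the color detail.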

MPEG V-DMC (Video-based Dynamic Mesh Coding) is an ongoing project that aims to compress animated sequences of textured meshes with dynamic topology and texture atlas. The codec builds on video compression techniques. The specification is expected to be published in 2024.

MPEG V-PCC (Video-based Point Cloud Compression), a similar project, is already finalized and its specification is available. It targets the compression of time-varying point clouds.

In 2024, an AI exploration activity within MPEG (PCC-AI) will issue a call for proposals on compressing dynamic colored point clouds with AI technologies.